Math is insanely important in our world. It will only get more so.

This week’s TBL looks at an interesting math problem whose seemingly obvious answer is dead wrong. There are other examples, too. I trust you’ll have fun with them and won’t be offended when you’re wrong (and we’ll almost all be wrong, try to explain it away, and/or lie about it).

If you like The Better Letter, please subscribe, share it, and forward it widely. It’s free, there are no ads, and I never sell or give away email addresses.

A couple of you have reported that this email is going to your spam folder. If you’re in that camp, you may need to whitelist my email address.

Thanks for visiting.

Let’s Make a Deal

When I was a kid, television meant three network stations, all in black-and-white. That meant the only fare offered when I stayed home from school sick was game shows, old network reruns, and soap operas.

One game show I liked was Let’s Make a Deal, the “marketplace of America.” The format involved members of the studio audience, who dressed in outrageous costumes to increase their chances of being selected as “traders,” making deals with the host. For decades, the host of the show was also its co-creator, Monty Hall, known as “America’s top trader, TV’s big dealer,” and for his signature, wheeler-dealer speaking cadence.

In most cases, Monty offered a trader something of value and provided a choice of whether to keep it or exchange it for a different item. The program’s defining element is that the other item remains hidden from the trader until that choice is made. The trader thus doesn’t know if s/he is getting something great or a “zonk,” a gag gift of little, if any, value.

For example, a woman might accept $50 in exchange for an envelope which held $1,000. Or a man might turn down $800 to keep a box that was revealed to contain the promise of a vacation trip … only to trade the trip and end up with paper towels.

Today and all weekdays, here in San Diego, the show airs at 9am on CBS. It is an hour-long show now and is hosted by Wayne Brady. He’s not identified as “America’s top trader,” but he is now “America’s big dealer.”

So, suppose you were a contestant on Let’s Make a Deal, were shown three doors, and were told that behind one of them is a car while the other two conceal goats. You get to choose one of the three doors.

Without revealing what is behind the chosen door, suppose further that Monty Hall has Carol Merrill (I know Monty died at age 96 in 2017 and that Carol turns 81 next week and retired from the show a long time ago, but work with me here — they were on the show when I was a kid) open one of the other two doors, revealing a goat. Our host then provides the option of either sticking with the chosen door or switching to the other unopened door.

Which option should you choose to maximize your chances of winning the car?

This question is the “Monty Hall problem,” a name that comes from the title of a 1975 letter in the journal The American Statistician. It fools just about everyone. It has also become a part of popular culture (see, e.g., here, here and here). All other things being equal, we’re likely to stick with our original choice. The intuitively obvious answer, that it makes no difference whether you stick or switch, is incorrect.

Mathematically, there is a big advantage to be gained from switching doors when permitted to do so. You can demonstrate it by simulation. The answer is a “veridical paradox” because the result appears absurd but is demonstrably true nonetheless. You can test it out by playing the game yourself here.
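A quick way to check the claim is a Monte Carlo simulation. The Python sketch below assumes the standard rules (the host knows where the car is and always opens an unpicked goat door, choosing at random when he has two options); the function names are illustrative, not from any source.

```python
import random


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round under the standard rules; return True if the car is won."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host knowingly opens an unpicked door that hides a goat.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the one door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of simulated rounds won with the given strategy."""
    rng = random.Random(seed)
    wins = sum(play(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"stick:  {win_rate(switch=False):.3f}")  # roughly 1/3
    print(f"switch: {win_rate(switch=True):.3f}")   # roughly 2/3
```

With 100,000 rounds, the two win rates land very close to one-third and two-thirds.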

Why should you always switch to the other door?

If the car is initially equally likely to be behind each door, a player who picks Door #1 and doesn’t switch has a one-in-three chance of winning the car while a player who picks Door #1 but then switches has a two-in-three chance. Monty removed an incorrect option from the unchosen doors, so contestants who switch double their chances of winning the car. Put another way, when you stick with your original choice, you’ll win only if your original choice was correct, which happens only one-in-three times. If you switch, you’ll win whenever your original choice was wrong, which happens two-out-of-three times.
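The same argument can be made exact by enumerating every equally likely case rather than sampling. This sketch assumes the contestant starts with a fixed door (Door #1 is index 0 here) and that the host picks at random between two goat doors when both are available.

```python
from fractions import Fraction


def exact_win_probabilities(pick: int = 0) -> tuple:
    """Exact (P(win by sticking), P(win by switching)), enumerating every case."""
    doors = range(3)
    stick = switch = Fraction(0)
    for car in doors:                       # car placed uniformly at random
        goat_doors = [d for d in doors if d != pick and d != car]
        for opened in goat_doors:           # host chooses uniformly among allowed doors
            p = Fraction(1, 3) * Fraction(1, len(goat_doors))
            other = next(d for d in doors if d not in (pick, opened))
            if pick == car:
                stick += p
            if other == car:
                switch += p
    return stick, switch


print(exact_win_probabilities())  # (Fraction(1, 3), Fraction(2, 3))
```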

Even with this explanation, many refuse to believe that switching is beneficial. After the Monty Hall problem was described and the answer explained in Marilyn vos Savant’s “Ask Marilyn” column in Parade magazine in 1990, approximately 10,000 readers wrote in to claim that Marilyn was wrong. It’s a common response. Even when given explanations, simulations, and formal mathematical proofs, many people (including many mathematicians and scientists) still do not accept that switching is the best strategy (you can read several funny and arrogant responses from academics to Marilyn here).*

As celebrated columnist and mathematician Martin Gardner explained in 1959, quoting Charles Sanders Peirce, “in no other branch of mathematics is it so easy for experts to blunder as in probability theory.”

Contestants are subject to the endowment effect, whereby we tend to overvalue the winning probability of the already chosen – the already “owned” – door. We are subject to status quo bias, whereby we prefer existing conditions over change. And we generally prefer errors of omission to errors of commission. All other things being equal, we would rather have bad things happen to us than bring them upon ourselves.

People routinely get the Monty Hall problem wrong. They overanalyze and make errors. Pigeons do not. They don’t do the math, obviously, but they are good, if unknowing, empiricists. After “playing” a few times, they simply follow the weight of the evidence to their reward and switch doors, winning twice as often.

The problem can get murkier because the host is in control of the game and can play with contestants’ minds – game theory! Implicit in the problem’s formulation is the idea that Monty knows where the car is – as in the real game – and will always have Carol open a door with a goat behind it after your choice. But he doesn’t really have to.
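How much the host’s rules matter is easy to see with a variant. Suppose, as an assumption for illustration, that the host does not know where the car is, opens one of the two unpicked doors at random, and merely happens to reveal a goat. Enumerating the cases and conditioning on that goat shows the advantage of switching disappears:

```python
from fractions import Fraction


def ignorant_host(pick: int = 0) -> tuple:
    """P(win | goat revealed) for (stick, switch) when the host opens a random unpicked door."""
    doors = range(3)
    goat_shown = stick = switch = Fraction(0)
    for car in doors:
        unpicked = [d for d in doors if d != pick]
        for opened in unpicked:             # host ignores where the car is
            p = Fraction(1, 3) * Fraction(1, len(unpicked))
            if opened == car:
                continue                    # car revealed: ruled out by what we saw
            goat_shown += p
            other = next(d for d in doors if d not in (pick, opened))
            if pick == car:
                stick += p
            if other == car:
                switch += p
    return stick / goat_shown, switch / goat_shown


print(ignorant_host())  # (Fraction(1, 2), Fraction(1, 2))
```

Under these assumed rules, sticking and switching each win half the time, which is why the standard answer depends on the host knowingly revealing a goat.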

Monty can also exert psychological pressure to influence your decision.

Suppose your original choice (Door #1) is correct but, understanding the probabilities and not knowing you’re right, you decide to switch doors (to Door #2) when asked. Further suppose Monty then offers you money not to switch — perhaps a lot of money. Odds are you’ll still switch even though a bird in the hand should be worth two in the bush.

Note Monty’s explanation.

“Now do you see what happened there? The higher I got [the more money offered not to switch], the more you thought the car was behind Door 2. I wanted to con you into switching there, because I knew the car was behind 1. That’s the kind of thing I can do when I’m in control of the game. You may think you have probability going for you when you follow the answer in her column, but there’s the psychological factor to consider.

“…If the host is required to open a door all the time and offer you a switch, then you should take the switch,” he said. “But if he has the choice whether to allow a switch or not, beware. Caveat emptor. It all depends on his mood.

“My only advice is, if you can get me to offer you $5,000 not to open the door, take the money and go home.”

As with the markets, our psychological make-up tends to push us to “buy high” and “sell low” — to act against our own interests.

Innumeracy, “the mathematical counterpart of illiteracy,” according to Douglas Hofstadter, describes “a person’s inability to make sense of the numbers that run their lives.” While illiteracy mostly strikes the uneducated, we are all prone to innumeracy.

In the investment world, we intuitively tend to think that if we start with $1,000 and suffer a 50 percent loss on Day 1 but make 50 percent back on Day 2 (day-to-day volatility being exceptionally high), we’re back to even. However, were that to happen, our $1,000 would be reduced to a mere $750. Similarly, a sum of money growing at eight percent simple interest for ten years ends up in the same place as it would at six percent (6.054 percent, to be exact) compounded annually over that same period. Most of us have trouble thinking in those terms.
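Both investment examples are easy to verify. A minimal check, assuming annual compounding for the second figure:

```python
start = 1_000.0
after_loss = start * (1 - 0.50)        # Day 1: lose 50 percent
after_gain = after_loss * (1 + 0.50)   # Day 2: gain 50 percent
print(after_gain)                      # 750.0

simple_growth = 1 + 0.08 * 10          # 8% simple interest for 10 years: 1.8x
compound_rate = simple_growth ** (1 / 10) - 1   # annual rate that also reaches 1.8x
print(f"{compound_rate:.3%}")          # 6.054%
```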

If you drove from city A to city B in one hour at 80mph, then back at 40mph, what was your average speed for the trip? If you say 60mph, you haven’t been paying attention.
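Average speed is total distance divided by total time, not the average of the two speeds. A quick check, taking the one-way distance to be 80 miles (one hour at 80mph):

```python
distance = 80 * 1              # miles: one hour at 80 mph
time_out = distance / 80       # hours
time_back = distance / 40      # hours: the slow leg takes twice as long
average = 2 * distance / (time_out + time_back)
print(round(average, 2))       # 53.33
```

That is 160 miles in three hours, about 53.3mph, the harmonic mean of the two speeds.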

Probability is particularly difficult for us, as noted, especially when the answer seems straightforward. To illustrate, if you have two children and one of them is a boy born on a Tuesday, what is the probability you have two boys? If you do not answer 13/27, or about 0.481, rather than the intuitive 1/2, you’re wrong (to find out why, go here**).
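The 13/27 figure can be checked by brute-force counting. This sketch assumes each child is equally likely to be a boy or a girl and to be born on any day of the week, independently, and that the family was chosen at random from all two-child families with at least one Tuesday-born boy (other readings of the question give other answers, which is part of the language problem):

```python
from fractions import Fraction
from itertools import product

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
# Each child is a (sex, weekday) pair; all 14 combinations assumed equally likely.
CHILD = list(product(["boy", "girl"], DAYS))

families = list(product(CHILD, repeat=2))                # 14 * 14 = 196 ordered pairs
has_tuesday_boy = [f for f in families if ("boy", "Tue") in f]
both_boys = [f for f in has_tuesday_boy if f[0][0] == f[1][0] == "boy"]

print(len(has_tuesday_boy), len(both_boys))              # 27 13
print(Fraction(len(both_boys), len(has_tuesday_boy)))    # 13/27
```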

Persi Diaconis, a former professional magician who is now a Stanford University professor specializing in probability and statistics, explains it this way, “Our brains are just not wired to do probability problems very well….”

This issue is of particular import to investing, which always involves analyzing probabilities amid uncertainty, and Bayes’ theorem*** is the best mechanism we have for doing so.

The inherent biases we suffer make matters worse. For example, we’re all prone to the gambler’s fallacy – we tend to think that randomness is somehow self-correcting (the idea that if a fair coin is fairly tossed nine times in a row and it comes up heads each time, tails is more likely on the tenth toss). However, as the commercials take pains to point out, past performance is not indicative of future results. On the tenth toss, the probability remains 50 percent.
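Enumerating every possible sequence of ten fair tosses makes the point concrete: among the sequences that begin with nine heads, exactly half end in tails.

```python
from fractions import Fraction
from itertools import product

# All 2**10 equally likely sequences of ten fair tosses.
sequences = list(product("HT", repeat=10))
nine_heads = [s for s in sequences if s[:9] == ("H",) * 9]
tenth_tails = [s for s in nine_heads if s[9] == "T"]

print(len(nine_heads))                                  # 2
print(Fraction(len(tenth_tails), len(nine_heads)))      # 1/2
```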

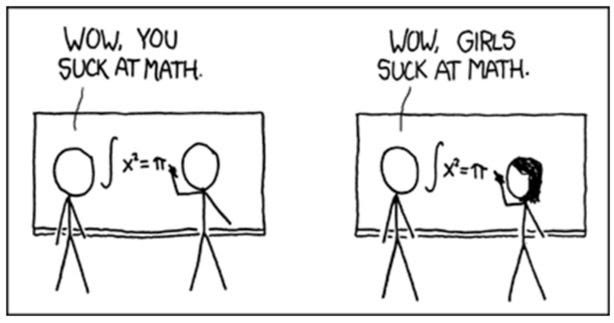

We also tend to suffer from availability bias and thus value our anecdotal experience over more comprehensive data (see the cartoon below, from XKCD). For example, the fact that most of your friends get pizza from Little Caesars is not sufficient evidence to conclude that it’s a good product (much less a good investment).

The conjunction fallacy is another common problem, whereby we judge the conjunction of two events to be more likely than one of those events on its own. Consider this typical example. Given the following description, a group of people was asked which was more probable: that Linda was a bank teller, or that she was a bank teller active in the feminist movement.

“Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in anti-nuclear demonstrations.”

Fully 85 percent of respondents chose the latter, even though the probability of two things both being true can never be greater than the probability of either one alone.
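The rule behind that answer is P(A and B) = P(A) × P(B | A) ≤ P(A). A toy population makes it concrete; the trait rates below are arbitrary assumptions, and the inequality holds no matter what they are:

```python
import random

rng = random.Random(0)
# A made-up population of 100,000 people with two yes/no traits.
# The 2% and 60% rates are illustrative assumptions, not survey data.
people = [
    {"teller": rng.random() < 0.02, "feminist": rng.random() < 0.60}
    for _ in range(100_000)
]

tellers = sum(p["teller"] for p in people)
feminist_tellers = sum(p["teller"] and p["feminist"] for p in people)

# Every feminist bank teller is also a bank teller, so this can never fail.
assert feminist_tellers <= tellers
print(tellers, feminist_tellers)
```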

Now suppose that Company X has a workforce that is only 20 percent female. Someone committing the base-rate fallacy might conclude that the company discriminates against women, but further analysis is required. If the applicant pool was only 10 percent female, Company X might actually have an exemplary record of hiring women. If you want to learn more in this area, you might start with this paper on teaching statistics.
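The comparison that matters is each group’s hiring rate, not the workforce share alone. With hypothetical numbers chosen to match the percentages above (1,000 applicants, 10 percent of them women, and 100 hires, 20 percent of them women), and assuming the workforce mirrors those hires:

```python
def hire_rate(hired: int, applied: int) -> float:
    """Share of a group's applicants who were hired."""
    return hired / applied


# Hypothetical counts, for illustration only.
women_applied, men_applied = 100, 900
women_hired, men_hired = 20, 80

print(f"women: {hire_rate(women_hired, women_applied):.1%}")  # 20.0%
print(f"men:   {hire_rate(men_hired, men_applied):.1%}")      # 8.9%
```

In this made-up case, a woman who applies is more than twice as likely to be hired as a man who applies.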

In his famous 1974 Caltech commencement address, the great physicist Richard Feynman described the scientific method as the best means to achieve progress. Even so, notice what he emphasizes.

“The first principle is that you must not fool yourself – and you are the easiest person to fool.”

The examples above make Feynman’s point. It’s easy to fool ourselves, especially when we want to be fooled. We all really like to be right and have a vested interest in our supposed rightness. So let’s all make a deal. Let’s check our work and our biases very carefully all the time, as best we can. And ask for help. And question our assumptions. And double-check our work.

Those would make some pretty good New Year’s resolutions.

_______________

* Marilyn was also a victim of “mansplaining” (“You are the goat!”). Nobody complained about the problem and its solution when it was introduced in The American Statistician in 1975, when a closely related problem was proposed by French mathematician Joseph Bertrand in 1889, or when a mathematically equivalent one, the three prisoners problem, was offered by Martin Gardner in 1959.

** This is a language problem, too.

*** Applying Bayes’ rule, the car is equally likely to be behind any of the three doors initially: the odds are 1 : 1 : 1. Those odds remain after the trader has chosen door #1, by independence. The posterior odds on the location of the car, given that the host opens door #3, equal the prior odds multiplied by the Bayes factor, or likelihood, which is, by definition, the probability of the new piece of information (the host opens door #3) under each of the hypotheses considered (the location of the car). Since the player initially chose door #1, the chance that the host opens door #3 is 50 percent if the car is behind door #1, 100 percent if the car is behind door #2, and zero if the car is behind door #3. Thus, the Bayes factor consists of the ratios ½ : 1 : 0 or, equivalently, 1 : 2 : 0, while the prior odds were 1 : 1 : 1. Therefore, the posterior odds become equal to the Bayes factor, 1 : 2 : 0. Given that the host opened door #3, the probability that the car is behind door #3 is zero, and it is twice as likely to be behind door #2 as door #1: a two-in-three chance versus one-in-three.
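The odds arithmetic in this footnote can be reproduced in a few lines, using exact fractions so the one-third and two-thirds come out cleanly (the dictionary layout is just one convenient choice):

```python
from fractions import Fraction

prior = {1: Fraction(1), 2: Fraction(1), 3: Fraction(1)}   # odds 1 : 1 : 1
# Probability that the host opens door #3, given the player picked door #1,
# under each hypothesis about where the car is.
likelihood = {1: Fraction(1, 2), 2: Fraction(1), 3: Fraction(0)}

unnormalized = {door: prior[door] * likelihood[door] for door in prior}
total = sum(unnormalized.values())
posterior = {door: odds / total for door, odds in unnormalized.items()}

print(posterior)  # {1: Fraction(1, 3), 2: Fraction(2, 3), 3: Fraction(0, 1)}
```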

Uh-Oh

Another conclusion to draw from the Monty Hall problem is obvious, correct, and potentially significant: math can be crazy hard.

Some math problems are so hard that, practically speaking, they can’t be solved. Many belong to a class called “NP-hard,” for which no efficient general method is known. Put enough elements in such a problem and solving it becomes impractical, because there isn’t enough time to do all the necessary computations.
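To see why size is the enemy, consider subset sum (does some subset of a list of numbers add up to a target?), a textbook NP-complete, and therefore NP-hard, problem. Checking a proposed answer is quick, but the naive search below may try all 2**n subsets of n numbers. Cleverer algorithms exist, but none is known to be efficient for every input. The numbers here are arbitrary:

```python
from itertools import combinations


def brute_force_subset_sum(numbers, target):
    """Try subsets from smallest to largest; return (match or None, subsets tried)."""
    tried = 0
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            tried += 1
            if sum(subset) == target:   # verifying a candidate is the easy part
                return subset, tried
    return None, tried


numbers = [3, 34, 4, 12, 5, 2]
print(brute_force_subset_sum(numbers, 9))      # ((4, 5), 18)
print(brute_force_subset_sum(numbers, 1000))   # (None, 64): every subset tried
```

Six numbers mean 64 subsets; 60 numbers would mean more than a quintillion.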

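Blockchains rely on a related idea for security. Their cryptography is built on problems, such as computing discrete logarithms, that are believed to be infeasible to solve unless you own the answer key (the secret key). Strictly speaking, those problems are not known to be NP-hard, but at realistic key sizes they are far beyond the reach of ordinary computers.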
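A toy version of the “answer key” idea: raising a number to a power modulo a prime is fast, but recovering the exponent (a discrete logarithm) by trial and error means stepping through candidates one at a time. Real systems such as Bitcoin use an elliptic-curve version with enormous numbers; the prime, base, and secret below are tiny, made-up values for illustration only:

```python
from typing import Optional


def discrete_log_brute_force(g: int, h: int, p: int) -> Optional[int]:
    """Find the smallest x with g**x % p == h by trying every exponent in turn."""
    value = 1                         # g**0 % p
    for x in range(p - 1):
        if value == h:
            return x
        value = value * g % p         # step to g**(x + 1) % p
    return None


p, g = 1_000_003, 2          # toy prime and base, far too small for real use
secret = 123_456             # the hypothetical "answer key"
public = pow(g, secret, p)   # easy direction: fast even for huge numbers

x = discrete_log_brute_force(g, public, p)   # hard direction: exhaustive search
print(pow(g, x, p) == public)                # True
```

At this size the search finishes in a blink; at the key sizes used in practice, no known method on ordinary computers comes close to finishing.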

There is one caveat, however. Improved technology can make a previously impractical problem solvable. A problem that was unsolvable on the abacus is solvable with a calculator. A problem that was unsolvable with a calculator is solvable with a CPU.

And here’s the rub: a problem that is unsolvable with a CPU might be solvable via quantum computing.

“It has been estimated that by 2035, there will exist a quantum computer capable of breaking the vital cryptographic scheme RSA2048. Blockchain technologies rely on cryptographic protocols for many of their essential sub-routines. Some of these protocols, but not all, are open to quantum attacks.”

That might turn out to be a fly in the ointment.

Totally Worth It

Your day is probably better.

This is the best thing I saw or read this week. The snarkiest. The creepiest. The craziest. The coolest. The sweetest. The saddest. The most despairing. The most delightful. The scariest. The awfulist. The most insightful. The loveliest. The most powerful. The most interesting. The most consistent. The most fun. The wowist. Good grief. Yikes. The best thread. The best interview. The most weirdly important.

Feel free to contact me via rpseawright [at] gmail [dot] com or on Twitter (@rpseawright) and let me know what you like, what you don’t like, what you’d like to see changed, and what you’d add. Praise, condemnation, and feedback are always welcome.

Don’t forget to subscribe and share TBL. Please.

Of course, the easiest way to share TBL is simply to forward it to a few dozen of your closest friends.

Please send me your nominees for this space to rpseawright [at] gmail [dot] com or via Twitter (@rpseawright).

The Spotify playlist of TBL music now includes more than 225 songs and about 16 hours of great music. I urge you to listen in, sing along, and turn the volume up.

Benediction

To those of us prone to wander, to those who are broken, to those who flee and fight in fear – which is every last lost one of us – there is a faith that offers hope. And may love have the last word. Now and forever. Amen.

Thanks for reading.

Issue 97 (January 14, 2022)