Fool's Errand

We can't predict market action for human reasons and for structural reasons, too.

Welcome (or welcome back) to TBL.

Would-be market influencers often predict market crashes. That’s because fear can be a powerful motivator and “everyone’s got a mortgage to pay.”

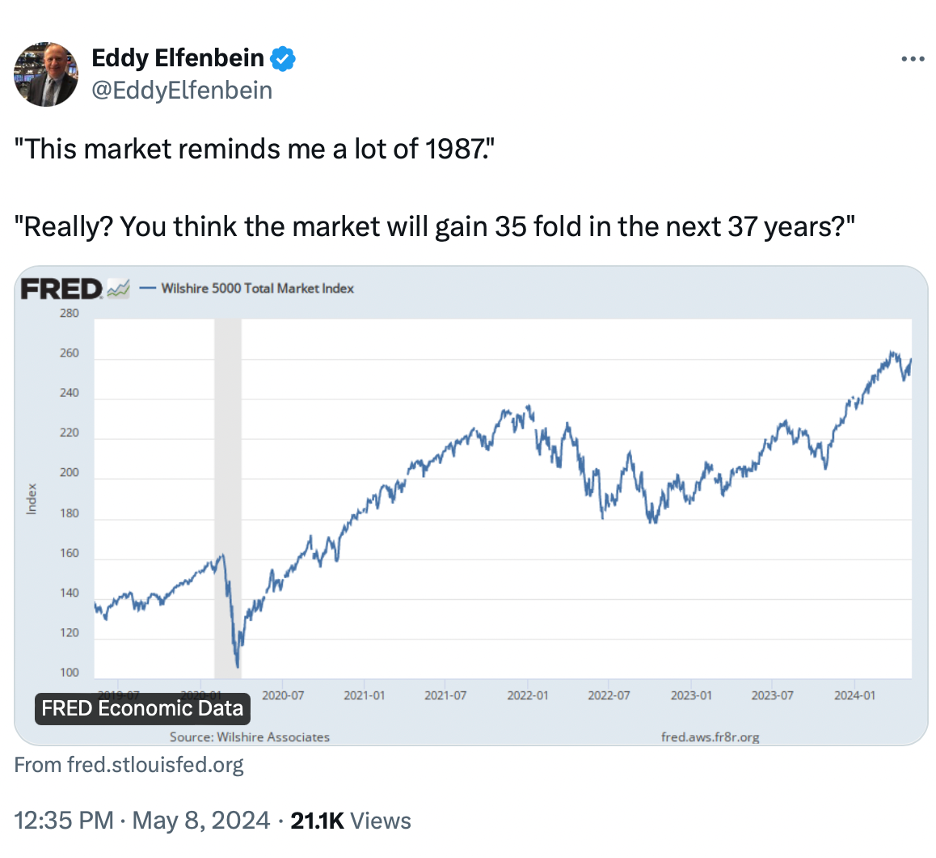

However, as Eddy Elfenbein suggests above, we don’t understand or predict what will happen in the markets very well. Why that is so is the subject of this edition of TBL.


If you like The Better Letter, please subscribe, share it, and forward it widely. Please.


Thanks for visiting.

Complexity, Chaos, and Chance

It was on a Monday, almost 37 years ago now, that the stock market suffered its worst day ever. I was still practicing law then. As the news filtered down at the office (somebody had been out and heard the news on a car radio), the attorneys at the firm began to talk about what was going on. As the day progressed and the news got worse, we talked less and less. A senior partner brought out a tiny black and white television to watch the network news coverage, which had broken into the day’s typical programming of reruns, game shows, and soap operas.

We huddled around the TV and watched. Feeling powerless. Quiet.

It was Black Monday: October 19, 1987. Coverage by The Wall Street Journal the next day began simply and powerfully. “The stock market crashed yesterday.”

After five days of intensifying stock market declines, the Dow Jones Industrial Average lost an astonishing 22.6 percent of its value that day. For its part, the S&P 500 fell 20.4 percent. Black Monday’s losses far exceeded the 12.8 percent decline of the original Black Monday, October 28, 1929, at the onset of the Great Depression.

It was “the worst market I’ve ever seen,” said John J. Phelan, Chairman of the New York Stock Exchange, and “as close to financial meltdown as I’d ever want to see.” Intel CEO Andrew Grove said, “It’s a little like a theater where someone yells ‘Fire!’”

A closer look at the coverage and its aftermath is revealing, especially for what is missing. There is no “smoking gun.” The Fed later identified “a handful of likely causes,” none dispositive and none well evidenced. Then-Fed Chair Alan Greenspan has written a lot about Black Monday and about what he did, but he has never tried to explain why it happened.

According to the Big Board’s Chairman, at least five factors contributed to the record decline: the market’s having gone five years without a major correction; general inflation fears; rising interest rates; conflict with Iran; and the volatility caused by “derivative instruments” such as stock-index options and futures. Although it became a major part of the later narrative, Phelan specifically declined to blame the crash on program trading alone.

The Brady Commission, appointed by President Reagan to provide an official explanation, pointed to alleged causes like the high merchandise trade deficit of that era, and to a tax proposal that might have made some corporate takeovers less likely.

There is precious little evidence to support any of these claims.

Survey evidence gathered from traders by Nobel laureate Robert Shiller generally confirmed that traders were selling simply because other traders were selling. It was classic herd behavior and, essentially, a self-reinforcing selling panic. As Shiller explained, Black Monday “was a climax of disturbing narratives. It became a day of fast reactions amid a mood of extreme crisis in which it seemed that no one knew what was going on.”

Here’s the bottom line: the Black Monday collapse had no definitive (or even clear) trigger. The market dropped by almost a quarter for no apparent reason. So much for the idea that the stock market is efficient.

This shouldn’t be news. As Shiller has demonstrated, “The U.S. stock market ups and downs over the past century have made virtually no sense ex post. It is curious how little known this simple fact is.”

Black Monday was wholly consistent with catastrophes of various sorts in the natural world and in society. Wildfires, fragile power grids, mismanaged telecommunication systems, global terrorist movements, migrating viruses, volatile markets, and the weather are all complex systems that evolve to a state of criticality. Upon reaching the critical state, these systems then become subject to cascades, rapid downturns in complexity from which they may recover but which will be experienced again repeatedly.

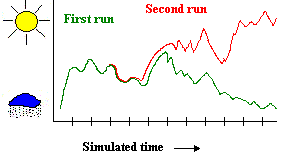

It sometimes ends up looking a lot like this.

For the markets, this means that we should not expect ever to be able to identify the trigger of any correction or crash in advance or to be able to predict such an event with any degree of specificity, no matter how high interest rates go or how overvalued the stock market is. Tomorrow could be the day the market crashes. It probably won’t be, because markets generally go up, for very good fundamental reasons, but it could be. It could even be today! That said, you shouldn’t expect to see it coming, and you may not even be able to explain it afterwards.

Let’s dig a bit deeper for the why.

Information is cheap, but meaning is expensive, as I often say. That wasn’t always the case.

For most of its existence, the investment business was driven by information. Those who had it hoarded and guarded it. They tended to dominate the markets too, because good information could be so very hard to come by. But the inexorable march of time as well as the growth and development of information technology have changed the nature of the investment “game” dramatically. Success in the markets today is driven by analysis – in other words, meaning.

Today, and unlike the past, most of us have access to the same information. What it means has now taken center stage.

And perhaps the key bit of meaning to be had is that it is incredibly hard to beat the market.

As NYU’s Clay Shirky has pointed out (using Dan Sperber’s Explaining Culture for his starting point), culture is “the residue of the epidemic spread of ideas.” These ideas are, themselves, overlapping sets of interpersonal interactions and transactions. This network allows for ideas to be tested, adapted, and adopted, expanded and developed, grown and killed off – all based upon how things play out and turn out. Some ideas receive broad acceptance and application. Others are only used within a small subculture. Some fail utterly. All tend to ebb and flow.

This explanation of culture seems directly applicable to the markets, especially since culture itself is best seen as a series of transactions – a market of sorts. If I’m right, understanding the markets is really a network analysis project and is (or should be) driven by empirical questions about how widespread, detailed, and coherent the specific ideas at issue – the analysis and application of information (“meaning”) – ultimately turn out to be.

Because there is no overarching “container” for culture (or, in my interpretation, the markets), creating (or even discovering) anything like “rules” of universal application is not to be expected generally, especially where human behavior is part of the game. That would explain why actual reductionism – lots of effects from few causes – is so rare in real life. Thus, seemingly inconsistent ideas like the general correlation of risk and reward on one hand and the low volatility anomaly on the other can coexist. Perhaps more and better information and analysis will suggest an explanation, but perhaps not, and we’ll never be able to explain it all.

“Einstein said he could never understand it all.”

As information increases – within given markets and sub-markets or within the market as a whole – so will various efficiencies. Therefore, over time, various approaches and advantages, like the ideas that generate them, will appear and disappear, ebb and flow. Trades get crowded. What works today may not continue to work. As the speed of information increases, so will the speed of market change.

Markets – like culture – are “asynchronous network[s] of replication, ideas turning into expressions which turn into other, related ideas.” Some persist over long periods of time while others are fleeting. But how long they persist remains always open to new information and better analysis.

In today’s world, with more and more information available faster and faster, it’s easy to postulate that market advantages will be harder to come by and more difficult to maintain. But an alternate (and I think better if not altogether inconsistent) idea is that the ongoing growth and glut of data will make the useful interpretation of that data more difficult and more valuable.

The ultimate arbiter of investment success, of course, is largely empirical. Obviously, we should gravitate toward what works, no matter our preconceived notions. No matter how elegant or intuitive the proposed idea, only results ultimately matter. In our information-rich world, those who can extract and apply meaning from all the reams of available information will be in ever-increasing demand. Those who cannot should, and will, fall by the wayside.

Typical thinking – thinking that should be cast to the dustbin of history – fails to grasp the complexity and dynamic nature of financial markets. And their unpredictability.

This is a good point to reiterate Mark Newfield’s admonition: The Forecasters’ Hall of Fame has zero members.

On our best days, wearing the right sort of spectacles, squinting and tilting our heads just so, we can be observant, efficient, loyal, assertive truth-tellers. However, on most days, all too much of the time, we’re delusional, lazy, partisan, arrogant confabulators. It’s an unfortunate reality, but reality nonetheless.

But that’s hardly the whole story.

Despite our best efforts to make it predictable and manageable, and even if we were great forecasters, the world is too immensely complex, chaotic, and chance-ridden for us to do it well. We are our own worst enemy, but there are other enemies, too.

Complexity

“Too large a proportion of recent ‘mathematical’ economics are mere concoctions, as imprecise as the initial assumptions they rest on, which allow the author to lose sight of the complexities and interdependencies of the real world in a maze of pretentious and unhelpful symbols.” (John Maynard Keynes)

The world we live in is infinitely complex. As NASA’s Gavin Schmidt explained, “[w]hether you are interested in the functioning of a cell, the ecosystem in Amazonia, the climate of the Earth or the solar dynamo, almost all of the systems and their impacts on our lives are complex and multi-faceted.” Similarly, the interworkings and interrelationships of the markets are really complex. It is natural for us to ask simple questions about complex things, and many of our greatest insights have come from the profound examination of such simple questions. However, as Schmidt emphasized, referencing The Hitchhiker's Guide to the Galaxy, “the answers that have come back are never as simple. The answer in the real world is never ‘42’.”

The great Russian novelist Leo Tolstoy got to the heart of the matter when he asked, in the opening paragraphs of Book Nine of War and Peace: “When an apple has ripened and falls, why does it fall? Because of its attraction to the earth, because its stalk withers, because it is dried by the sun, because it grows heavier, because the wind shakes it, or because the boy standing below wants to eat it?” With almost no additional effort, today’s scientists could expand this list almost infinitely.

A system is deemed complex when it is composed of many parts that interconnect in intricate ways. A system presents dynamic complexity when cause and effect are subtle and play out over time. Thus, complex systems may exhibit dramatically different effects in the short run and the long run, and dramatically different effects in one part of the system as compared with others. Obvious interventions in such systems may produce non-obvious – indeed, wildly surprising – consequences.

In other words, a system is complex when it is composed of a group of related units (sub-systems), for which the degree and nature of the relationships is imperfectly known. The system’s resulting emergent behavior, in the aggregate, is difficult – perhaps impossible – to predict, even when sub-system behavior is readily predictable.

Complexity theory, therefore, attempts to reconcile the unpredictability of non-linear dynamic systems with our sense (and in most cases the reality) of underlying order and structure. Its implications include the impossibility of long-range planning despite a pattern of short-term predictability together with highly dramatic and unexpected change. Contrary to classical economic theory, complexity theory demonstrates that the economy is not a system in equilibrium, but rather a system in motion, perpetually constructing itself anew.

The financial markets are simply too complex and too adaptive to be readily predicted.1

Just as the Emperor thought (albeit wrongly) about Mozart’s music containing “too many notes” in Amadeus, there are too many variables to predict market behavior with any degree of detail, consistency, or competence. Your crystal ball almost certainly does not work any better than anyone else’s.

But the problem extends further up and farther in. As Caltech systems scientist John C. Doyle has established, a wide variety of systems, both natural and man-made, are robust in the face of large changes in environment and system components, and yet they are still potentially fragile to even small perturbations. Such “robust yet fragile” networks are ubiquitous in our world. They are “robust” in that small shocks do not typically spread very far in the system. However, since they are also “fragile,” a tiny adverse event can occasionally bring down the entire system.

Such systems are efficiently fine-tuned and thus appear almost boringly robust despite the potential for major perturbations and fluctuations. As a consequence, systemic complexity and fragility are largely hidden, often revealed only by rare catastrophic failures. Modern institutions and technologies facilitate robustness and efficiency, but they also enable catastrophes on a scale unimaginable without them – from network and market crashes to war, epidemics, and climate change.

While there are benefits to complexity as it empowers globalization, interconnectedness, and technological advance, there are unforeseen and sometimes unforeseeable yet potentially catastrophic consequences, too. Higher and higher levels of complexity mean that we live in an age of inherent and, according to the science, increasing surprise and disruption. The rare (but growing less rare) high-impact, low-frequency disruptions are simply part of systems that are increasingly fragile and susceptible to sudden, spectacular collapse.

Like Black Monday.

John Casti’s X-Events even argues that today’s advanced and overly complex systems and societies have grown highly vulnerable to extreme events that may ultimately result in the collapse of our civilization. Examples could include a global internet, AI or other technological collapse, transnational economic meltdown, or even robot uprisings.

We are thus almost literally (modifying Andrew Zolli’s telling phrase slightly) tap dancing in a minefield. Not only is the future opaque to us, we also don’t quite know when our next step is going to result in a monumental explosion.

Chaos

“Chaos is a friend of mine.” (Bob Dylan)

Over 60 years ago, Edward Lorenz created an algorithmic computer weather model at MIT. During the winter of 1961, Lorenz was running a series of weather simulations using this computer model when he decided to repeat one of them over a longer period. To save time (the computer and the model were primitive by today’s standards, as those who reserved computer time in the middle of the night while holding punch cards will remember), he started the new run in the middle, typing in numbers from the first run for the initial conditions, assuming the model would provide the same results as the prior run and then go on from there.

Instead, the two weather trajectories diverged wildly.

After ruling out computer error, Lorenz realized that he had not entered the initial conditions for the second run exactly. His computer stored numbers to an accuracy of six decimal places but printed the results to three decimal places to save space. Thus, Lorenz started the second run with the number 0.506; the original run began with 0.506127. Subsequent numbers had been similarly abbreviated. Astonishingly, those tiny discrepancies altered the result enormously.

Accordingly, within complex adaptive systems, a tiny change in initial conditions can provoke monumental changes in outcome. This finding (even using a highly simplified model and confirmed by further testing) demonstrated that linear theory, the prevailing system theory at the time, could not explain what he had observed.
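To see how little it takes, here is a minimal sketch in Python. It does not reproduce Lorenz’s weather model. As a stand-in, it assumes the logistic map, a one-line textbook equation that is known to behave chaotically when its parameter is set to 4. The function name and the 50-step horizon are my own illustrative choices. The two runs start from 0.506127 and 0.506, the same pair of numbers described above.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x), a stand-in for a chaotic model (not Lorenz's)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


STEPS = 50  # illustrative horizon
full = logistic_trajectory(0.506127, STEPS)   # the six-decimal starting value
rounded = logistic_trajectory(0.506, STEPS)   # the three-decimal printout value

# Gap between the two runs at every step.
gaps = [abs(a - b) for a, b in zip(full, rounded)]

# First step at which the runs differ by more than 0.1 (the values live in [0, 1]).
first_big = next(i for i, gap in enumerate(gaps) if gap > 0.1)

print(f"starting gap: {gaps[0]:.6f}")
print(f"largest gap:  {max(gaps):.3f}")
print(f"runs differ by more than 0.1 from step {first_big}")
```

Nothing random happens anywhere in this code. The two runs follow exactly the same rule and begin just over one ten-thousandth apart, yet within the 50 steps they no longer resemble each other.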

We tend to assume that whether a particular stoplight is red or green at a given time shouldn’t change our expectation for how long our commute to work is going to take. But it can and often does – sometimes enormously.

Chaos isn’t necessarily a bad thing, the Joker notwithstanding.

But, it needn’t be an opportunity, either.

It just is.

Lorenz built upon the work of late-19th-century mathematician Henri Poincaré, who demonstrated that the movements of as few as three heavenly bodies are hopelessly complex and impossible to calculate, even though the underlying equations of motion seem simple (the so-called “three-body problem”). Lorenz was thus able to make the otherwise counterintuitive leap to the conclusion that highly complex systems are not ultimately predictable. This phenomenon (“sensitive dependence”) came to be called the “butterfly effect,” in that a butterfly flapping its wings in Brazil might set off a tornado in Texas.

Accordingly, complex systems allow, at best, only for probabilistic forecasts with significant margins for error and often seemingly outlandish and hugely divergent potential outcomes. We can generally accept mild outcome differences in our models, forecasts, and expectations. But the outcomes Lorenz discovered are another matter altogether. That’s because, per Lorenz, chaos is “[w]hen the present determines the future, but the approximate present does not approximately determine the future.”

Financial markets exhibit the kinds of behaviors that might be predicted by chaos theory (and the related catastrophe theory). They are dynamic, non-linear, and highly sensitive to initial conditions. As shown above, even tiny differences in initial conditions or infinitesimal changes to current, seemingly stable conditions can result in monumentally different outcomes. Thus, markets respond like systems ordered along the lines of self-organized criticality – unstable, fragile, and largely unpredictable – at the border of stability and chaos.

Traditional economics has generally failed to grasp the inherent complexity and dynamic nature of the financial markets. That (utterly chaotic) reality goes a long way toward explaining Black Monday and the 2008-2009 real estate meltdown and financial crisis, events that seem inevitable in retrospect but were predicted by almost nobody.

Migrating viruses, wildfires, power grids, telecommunication systems, global terrorist movements, markets, the weather, and traffic systems (among many others) are all self-organizing, complex systems that evolve to states of criticality. Upon reaching a critical state, these systems then become subject to cascades, rapid down-turns in complexity from which they may recover but which may be experienced again repeatedly.
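A toy model makes criticality and cascades concrete. The sketch below assumes the Bak-Tang-Wiesenfeld sandpile, a standard textbook model of self-organized criticality, as a stand-in. It is not a model of markets, and the grid size, the number of grains, the seed, and the function name `drop_grain` are illustrative choices of mine. Grains are dropped one at a time on random cells. Any cell holding four grains topples, passing one grain to each neighbor, which may topple in turn.

```python
import random

THRESHOLD = 4  # a cell topples once it holds this many grains


def drop_grain(grid, row, col):
    """Add one grain at (row, col), relax the pile, return the number of topplings."""
    size = len(grid)
    grid[row][col] += 1
    unstable = [(row, col)]
    topplings = 0
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < THRESHOLD:
            continue  # stable (or already relaxed by an earlier toppling)
        grid[r][c] -= THRESHOLD
        topplings += 1
        if grid[r][c] >= THRESHOLD:
            unstable.append((r, c))
        # One grain goes to each neighbor; grains pushed past the edge are lost.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < size and 0 <= nc < size:
                grid[nr][nc] += 1
                if grid[nr][nc] >= THRESHOLD:
                    unstable.append((nr, nc))
    return topplings


rng = random.Random(1987)  # fixed seed so the run is repeatable
SIZE, DROPS = 15, 5000     # illustrative values
grid = [[0] * SIZE for _ in range(SIZE)]
cascades = [
    drop_grain(grid, rng.randrange(SIZE), rng.randrange(SIZE))
    for _ in range(DROPS)
]

quiet = sum(1 for s in cascades if s == 0)
print(f"drops that caused no toppling: {quiet} of {DROPS}")
print(f"largest cascade: {max(cascades)} topplings")
```

Every grain is identical and every drop follows the same rule. In models of this kind, most grains typically do nothing, while an occasional grain, indistinguishable from the rest, sets off a cascade across much of the pile. Nothing about that one grain tells you in advance that it will be the trigger.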

Chaos theory only became formalized, following Lorenz, in the second half of the 20th Century. What had been attributed to an imprecision in measurement or unexplained “noise” was considered by chaos theorists as a full component of the studied systems.

Storytellers, on the other hand, have understood the fundamentals of chaos theory – without calling it that or grasping the mathematical details – for millennia (since at least Herodotus’s Histories, in the 5th Century BC). Whether we’re talking about alternate history, time travel and other science fiction stories, fantasy, video games, literary devices, or speculative history, artists have long recognized that tiny changes of fact can have enormous and far-reaching consequences.

Ringo Starr described how he came to be part of the biggest band the world has ever seen as his version of “sliding doors.”

Ironically, while chaos theory shows that a small change in initial conditions can dramatically change outcomes, what I call the stasis hypothesis holds that even enormous changes in initial conditions often don’t change outcomes very much at all. That’s because, no matter how good the opportunity, the plan, or the operation, humans are always a wildcard.

For example, there is a paranoid paradox of espionage, almost universally true, that the better the intelligence, the stupider the use of it. Again and again, history shows that “those with the keenest spies, the most thorough decryptions of enemy code, and the best flow of intelligence about their opponents, have the most confounding fates. Hard-won information is ignored or wildly misinterpreted. It’s remarkably hard to find cases where a single stolen piece of information changed the course of a key battle.”

Accordingly, even though a Union corporal from Indiana found the Confederate battle plan at Antietam wrapped around three cigars and quickly transmitted the fortuitous finding to the powers-that-be, it didn’t help all that much. George McClellan did not become a better commander with the added information and twice the manpower of his Confederate enemy. He still had “the slows” and a decisive Union victory would not come until nearly a year later at Gettysburg, with McClellan in forced retirement, looking to run for president and oust Lincoln. In other words, our “intelligence” isn’t any more intelligent than we are.

Chaos theory makes things far less predictable than we assume. Our general inability to make sense of what is right in front of us makes reality even more opaque.

But that’s not the story we tell ourselves, is it?

Chance

“I returned, and saw under the sun, that the race is not to the swift, nor the battle to the strong, neither yet bread to the wise, nor yet riches to men of understanding, nor yet favour to men of skill; but time and chance happeneth to them all.” (Ecclesiastes 9:11)

On a fine Sunday morning 110 summers ago in Sarajevo, an automobile driver ferrying the Austro-Hungarian Archduke Franz Ferdinand and his wife made a wrong turn off the main street into a narrow passageway and came to a stop directly in front of a teenaged activist member of the terrorist organization, Young Bosnia. Gavrilo Princip drew his pistol and fired twice. The archduke and his wife fell dead. Within hours, World War I was well on its way to seeming inevitable (or at least necessary), precipitated by the randomness of a wrong turn.

In related news, the idea that history is rational and sheds light on an intelligible story, much less a story of inevitable and inexorable advance, was also shot dead, as dead as the archduke himself.

Austria invaded Serbia in response to the assassination. Russia had guaranteed protection for the Serbs via treaty and duly responded. Germany, also by treaty, was committed to support Austria when Russia intervened. France was bound to Russia by treaty, causing Germany to invade neutral Belgium to reach Paris by the shortest possible route. Great Britain then entered the fray to support Belgium and, to a lesser extent, France. From there, the entire situation went completely to hell.

Alleged experts on both sides expected a quick outcome. “Over by Christmas” was the consensus view, even though the expected prevailing parties remained in dispute. Forecasters then were no better than today’s iteration, obviously. The “absolute neutrality” of the United States lasted until the spring of 1917 when Germany’s unrestricted submarine warfare policy caused too much damage to U.S. interests to be ignored.

By 1919, after the war’s end, 10 million had died in addition to the archduke and his wife, even as the seeds of Nazism and the Second World War were sown. Western civilization, previously so full of optimism and fairly broad prosperity, had drawn itself into a very tight circle and begun blasting away at each other from very close range for no very good reason.

Suffice it to say, we readily and routinely overestimate the degree of predictability in complex systems and underestimate the degree of randomness. In other words, past performance is not indicative of future results.

The world is far more random than we’d like to think. Indeed, the world is far more random than we can imagine.

Even worse, our economic and market models typically assume a “mild randomness” of market fluctuations. In reality, what visionary mathematician Benoît Mandelbrot called “wild randomness” prevails: risk is concentrated in a few rare, hard (perhaps impossible) to predict, extreme market events. The fractal mathematics that he invented allows us a glimpse at the hidden sources of apparent disorder, the order behind monstrous chaos. However, as Mandelbrot is careful to emphasize, it is empty hubris to think that we can somehow master market volatility. When one looks closely at financial-market data, seemingly unexplained accidents routinely appear.
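The difference between the two kinds of randomness is easy to see with simulated numbers. This is a rough sketch, not Mandelbrot’s own method and not real market data. It assumes a bell curve for “mild” moves and, for “wild” moves, a Pareto distribution with a tail index of 1.5 and a random sign. The tail index, the sample size, the seed, and the helper name `top_share` are all my assumptions. It then asks how much of the total movement comes from the most extreme 1 percent of observations.

```python
import random


def top_share(values, fraction=0.01):
    """Share of total absolute movement contributed by the largest `fraction` of moves."""
    magnitudes = sorted((abs(v) for v in values), reverse=True)
    k = max(1, int(len(magnitudes) * fraction))
    return sum(magnitudes[:k]) / sum(magnitudes)


rng = random.Random(1987)  # fixed seed so the run is repeatable
N = 20_000                 # illustrative sample size

# "Mild" randomness: bell-curve moves.
mild = [rng.gauss(0.0, 1.0) for _ in range(N)]

# "Wild" randomness (assumed stand-in): heavy-tailed Pareto moves with a random sign.
wild = [rng.choice((-1, 1)) * rng.paretovariate(1.5) for _ in range(N)]

mild_share = top_share(mild)
wild_share = top_share(wild)

print(f"mild: top 1% of moves account for {mild_share:.1%} of total movement")
print(f"wild: top 1% of moves account for {wild_share:.1%} of total movement")
```

Under these assumptions, the extreme 1 percent matters far more in the wild series than in the mild one. A model calibrated to the mild series would treat the moves that matter most as practically impossible.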

The financial markets are inherently dangerous places to be, Mandelbrot stresses. “By drawing your attention to the dangers, I will not make you rich, but I could help you avoid bankruptcy. I am a doomsday prophet – I promise more blood and tears than windfall profits.” Yet, despite those warnings, we continue to search (largely in vain) for methods to the madness.

In what is now a ubiquitous concept, a “black swan” is an extreme event – good or bad – that lies beyond the realm of our normal expectations and which has enormous consequences (e.g., Donald Rumsfeld’s “unknown unknowns”). It is, by definition, an outlier. Examples include the rise of Hitler, winning the lottery, the fall of the Berlin Wall and the ultimate demise of the Soviet bloc, and the development of Viagra (which was originally designed to treat hypertension before a surprising side effect was discovered).

As Nassim Taleb famously pointed out in his terrific book outlining the idea, most people (at least in the northern hemisphere) expect all swans to be white because that is consistent with their personal experience. Thus, a black swan (native to Australia) is necessarily a surprise. Black swans also have extreme effects, both positive and negative. Just a few explain a surprising amount of our history, from the success of some ideas and discoveries to events in our personal lives.

Moreover, their influence seems to have grown beginning in the 20th century (on account of globalization and growing interconnectedness), while ordinary events – the ones we typically study, discuss and learn about in history books or from the news – seem increasingly inconsequential.

For the purposes considered here, the key element of black swan events is their unpredictability. Yet we still try to predict them. We often insist upon it.

I suspect that our insistence on trying to do what cannot be done is the natural result of our constant search for meaning in an environment where noise is everywhere and signal vanishingly hard to detect. Randomness is dismayingly difficult for us to deal with, too. We are meaning-makers at every level and in nearly every situation. Even so, as ever, information is cheap but meaning is expensive. And, as Morgan Housel emphasizes, long tails drive everything.

We like to think that intelligence and effort can readily overcome the vagaries of the markets. That’s largely because we are addicted to certainty, an addiction that also explains, for example, the popularity of the color-by-numbers television procedural, wherein the crime is neatly and definitively solved, and all issues related thereto resolved, in an hour (nearly a quarter of which is populated with commercials). As Andy Greenwald explained, these crime dramas are “selling the gruesomeness of crime and the subsequent, reassuring tidiness of its implausibly quick resolution.”

Real life isn’t like that. Only about half of real-life murders are solved.

Randomness is almost everywhere and far more consequential than we tend to assume.

As Cambridge’s John Rapley showed, “economists who’ve actually worked out scientifically what contribution our own initiative plays in our success have found it to occupy an infinitesimally small share: the vast majority of what makes us rich or not comes down to pure dumb luck, and in particular, being born in the right place and at the right time.”

The Nobel laureate Daniel Kahneman’s favorite formula relates to luck and skill: “My favorite formula was about success, and I wrote success equals talent plus luck and great success equals talent plus a lot of luck.” Moreover, “[t]alent is necessary but it’s not sufficient, so whenever there is significant success, you can be sure that there has been a fair amount of luck.”

The reality is that luck (and, if you have a spiritual bent, grace) plays an enormous role in our lives – both good and bad – just as luck plays an enormous role in many specific endeavors, from baseball to investing to poker to winning a Nobel Prize to producing a chart-topping hit record. If we’re honest, we will recognize that many of the best things in our lives required absolutely nothing of us and what we count as our greatest achievements usually required great effort, skill, and even more luck.

If you think you can control, predict, or forecast the markets, think again. Complexity, chaos, and chance, together with garden-variety human foibles, make that effort a fool’s errand.

__________

1 Scientists at the RAND Corporation have developed a set of practical principles for coping with complexity and uncertainty – to give us a chance to learn, to adapt, and to shape the future to our liking. Simplicity and flexibility are key. Complexity is not.

Totally Worth It

This week saw the 200th anniversary of the first performance of Beethoven’s iconic Ninth Symphony.

Feel free to contact me via rpseawright [at] gmail [dot] com or on Twitter (@rpseawright) and let me know what you like, what you don’t like, what you’d like to see changed, and what you’d add. Praise, condemnation, and feedback are always welcome.

You may hit some paywalls below; most can be overcome here.

This is the best thing I saw or read recently. The funniest. The most powerful. The most depressing. The best podcast. Karma. Over-optimization. Good model for universities to follow. Talent. A song created entirely by AI (duh). Winner! Running.

Please send me your nominations for this space to rpseawright [at] gmail [dot] com or via Twitter (@rpseawright).

The TBL playlist now includes more than 275 songs and about 20 hours of great music. I urge you to listen in, sing along, and turn up the volume.

Benediction

This section of TBL typically features a musical benediction, but silence can sing, too.

Mr. Rogers was born 96 years ago last month. Riffing off his famous Emmy award acceptance speech, A Beautiful Day in the Neighborhood, a delightful movie about Mr. Rogers, shows how Fred used silence to inspire gratitude and love.

May we all take a minute to go and do likewise.

As always, thanks for reading.

Issue 173 (May 10, 2024)
